I’m sure I’m not the only one who’s done this: have you ever visited a local electronics store such as Best Buy to look at the latest 4K HDR TVs? While you’re browsing, you come across jargon you don’t understand. One TV supports HDR10, while another sports Dolby Vision HDR with 12-bit color.

What does this all mean? If you’re still reading, you’re most likely looking for more depth on the subject. Sit back and relax; we’ve got you covered. You can also watch our guys break it down with the video below from our YouTube channel, BZBtv.

HDR vs Non-HDR

You’re probably all familiar with 4K resolution: 4K has four times the pixels of Full HD, or 1080p. HDR (high dynamic range), on the other hand, is the technology that lets your TV display a wider range of colors and contrast. While four times more pixels is great, it’s HDR that’s revolutionizing the way we watch. Contrast ratio and color accuracy are the two most important factors in how a TV looks, and HDR broadens both significantly.
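The “four times” figure is simple pixel-count arithmetic. Here is a quick sketch, assuming the consumer 4K UHD resolution of 3840 × 2160 (the article itself only says “4K”):

```python
# Consumer "4K" (UHD) and Full HD (1080p) resolutions as (width, height).
# Assumption: "4K" here means the 3840 x 2160 UHD format used by TVs.
UHD_4K = (3840, 2160)
FULL_HD = (1920, 1080)


def pixel_count(resolution):
    """Total number of pixels for a (width, height) pair."""
    width, height = resolution
    return width * height


uhd_pixels = pixel_count(UHD_4K)
fhd_pixels = pixel_count(FULL_HD)
ratio = uhd_pixels / fhd_pixels  # twice the width x twice the height

print(f"4K: {uhd_pixels:,} px, 1080p: {fhd_pixels:,} px, ratio: {ratio}")
```

Because both the width and the height double, the pixel count quadruples. None of that arithmetic touches color or contrast, which is where HDR comes in.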

Most new TVs can now display HDR content, which preserves more detail in the bright and dark areas of a video, for a higher “dynamic range” than non-HDR content. Everything you’ve ever watched that isn’t HDR is now referred to as “SDR,” or standard dynamic range.

HDR Magnifies Color Depth

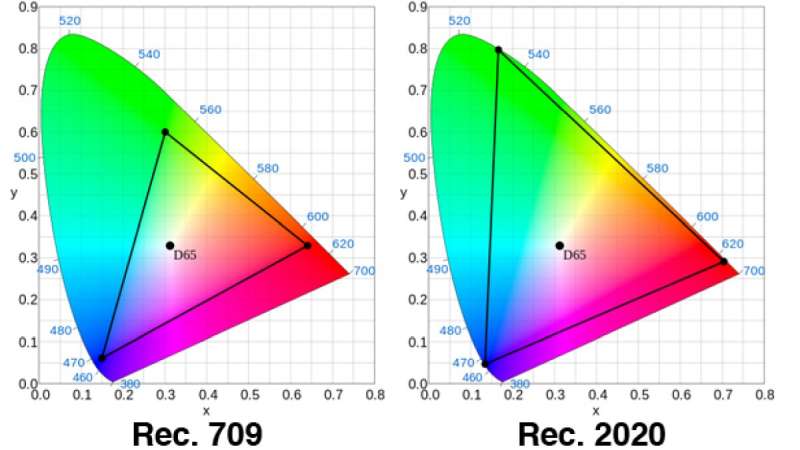

A TV’s color gamut is the full range of colors it can create. The current HDTV color standard is called Rec. 709. Unfortunately, Rec. 709 is the modern-day equivalent of black and white: the human eye can see far more colors than it covers. To get more realistic color, two aspects must improve: color gamut and bit depth.

Rec. 2020 is the color space standard for 4K and even 8K TVs, defined for 10 or 12 bits per color channel. The simplest way to picture this is with triangles. The smallest triangle is the Rec. 709 color space. Rec. 2020 covers the entire Rec. 709 color space and is much larger. The difference in size may not look like much, but depending on the bit depth, which we’ll cover later, the number of possible colors can differ by billions.
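To put rough numbers on the triangle picture, the sketch below compares the two gamut triangles on the CIE 1931 xy chromaticity diagram, using the red, green, and blue primaries published in ITU-R BT.709 and BT.2020. It also checks that every Rec. 709 corner sits inside the Rec. 2020 triangle. Area on the xy diagram is only a rough proxy for how many colors a person can tell apart, so treat the ratio as illustrative rather than as a measure of perceived color.

```python
# CIE 1931 (x, y) chromaticity coordinates of the red, green, and blue primaries.
REC_709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
REC_2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]


def triangle_area(points):
    """Shoelace formula for the area of a triangle given three (x, y) vertices."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def inside_triangle(point, triangle):
    """True if the point lies inside or on the edge of the triangle (cross-product sign test)."""
    px, py = point
    crosses = []
    for i in range(3):
        (ax, ay), (bx, by) = triangle[i], triangle[(i + 1) % 3]
        crosses.append((bx - ax) * (py - ay) - (by - ay) * (px - ax))
    return all(c >= 0 for c in crosses) or all(c <= 0 for c in crosses)


area_709 = triangle_area(REC_709)
area_2020 = triangle_area(REC_2020)
print(f"Rec. 2020 / Rec. 709 area on the xy diagram: {area_2020 / area_709:.2f}")

# Both triangles are convex, so if all three Rec. 709 corners are inside
# Rec. 2020, the whole Rec. 709 gamut is inside it too.
print(all(inside_triangle(p, REC_709_corner) for p in [None] if False) or
      all(inside_triangle(corner, REC_2020) for corner in REC_709))
```

By this measure the Rec. 2020 triangle is a bit under twice the size of the Rec. 709 triangle, and it fully contains it.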

Bottom line: the Rec. 2020 color space can reproduce colors that Rec. 709 cannot, and that wider color gamut lets TVs show more realistic colors.

Bit Depth = Ocean of Colors

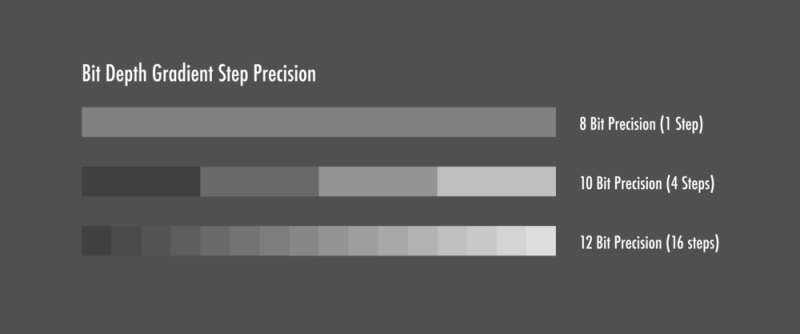

Bit depth is the other aspect, and it’s a bit more complicated, no pun intended. TVs don’t have an infinite color palette to choose from, nor can they mix colors freely to create new ones. TVs are digital, and each color is represented by a number. Current HDTVs that output 1080p use an 8-bit system, which means your HDTV can show 256 possible shades of each primary color: red, green, and blue.

To put this into perspective, if red has a value of 20, it’s a dark red that’s very close to black, but it’s still red. At the other end, a red with a value of 240 is a very bright red. An 8-bit system can create about 16.7 million colors (256 red × 256 green × 256 blue).
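All of the color counts in this article follow from one formula. A display with n bits per channel has 2^n shades each of red, green, and blue, and (2^n)^3 combinations in total. Here is a short sketch of that arithmetic:

```python
def shades_per_channel(bits):
    """Number of distinct values one color channel (red, green, or blue) can take."""
    return 2 ** bits


def total_colors(bits):
    """Every combination of red, green, and blue shades at this bit depth."""
    return shades_per_channel(bits) ** 3


for bits in (8, 10, 12):
    print(f"{bits}-bit: {shades_per_channel(bits):,} shades per channel, "
          f"{total_colors(bits):,} colors")

# Adding 2 bits quadruples the shades per channel, so the color count grows 4 ** 3 = 64x.
print(total_colors(10) // total_colors(8), total_colors(12) // total_colors(10))
```

That factor of 4 × 4 × 4 is where the “64x” figures in the 10-bit and 12-bit sections come from.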

10-Bit

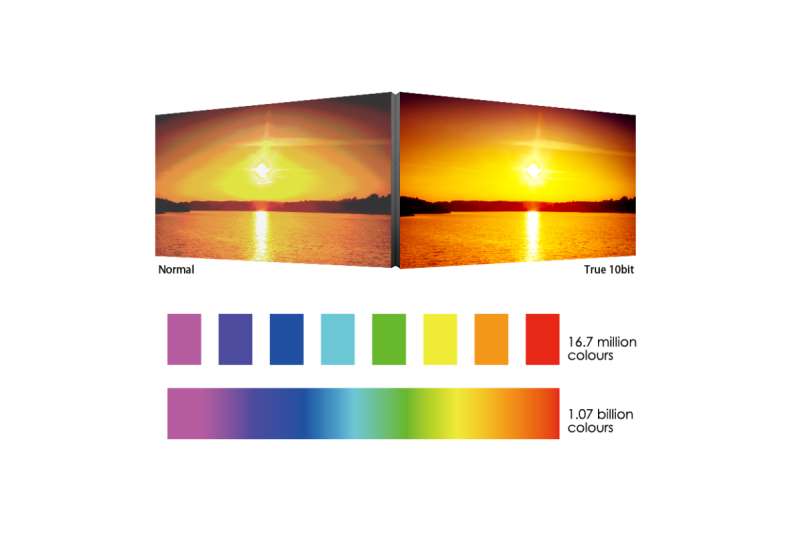

Combine greater bit depth with a wider color gamut, and color realism takes a big leap. 10-bit color offers 1,024 shades per channel and can represent 64 times the colors of 8-bit. Do the math, and that equates to over 1 billion colors, a massive increase over 8-bit.

Here’s how that looks in practice: if you compare two TVs, one showing an image in 8-bit and the other in 10-bit, the gradients on the 10-bit TV will look smooth. You won’t see visible steps between shades, because of the range of colors a 10-bit TV can produce. The image above is a perfect example of 8-bit vs. 10-bit, with the 8-bit image on the left.
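The banding you see in an 8-bit gradient is easy to reproduce numerically. The sketch below quantizes the same smooth horizontal ramp at 8 and 10 bits and reports how many distinct steps survive and how wide each visible band is on average. The ramp (20% to 40% of full signal) and the 3840-pixel width are illustrative assumptions, not measurements of any real TV.

```python
def quantize(value, bits):
    """Round a 0.0-1.0 signal level to the nearest code this bit depth can represent."""
    max_code = 2 ** bits - 1
    return round(value * max_code)


def banding(start, end, width, bits):
    """Quantize a ramp from start to end across `width` pixels.

    Returns (number of distinct steps, average band width in pixels).
    """
    codes = [quantize(start + (end - start) * i / (width - 1), bits) for i in range(width)]
    steps = len(set(codes))
    return steps, width / steps


for bits in (8, 10):
    steps, band_px = banding(0.20, 0.40, 3840, bits)
    print(f"{bits}-bit: {steps} steps, each about {band_px:.0f} px wide")
```

With roughly four times as many steps, each band in the 10-bit ramp is about a quarter as wide, which is why the 10-bit gradient reads as smooth.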

12-Bit

12-bit goes further still, with 4,096 shades per channel and 64 times the colors of 10-bit (about 68.7 billion). Dolby Vision HDR supports 12-bit, but you’ll need a TV bright enough to actually show the difference between 10-bit and 12-bit color.

Light Is Measured In Nits

One other aspect to consider with HDR is nits. A TV or monitor outputs light, and that light is measured in nits. Nits have nothing to do with the brightness settings you see on monitors and TVs. One nit is a luminance of 1 candela per square meter (cd/m²), and a typical candle produces about 1 candela.

To give you some perspective, a typical HDTV or PC monitor can easily reach about 120 nits, and a high-end monitor can double that. A movie screen in an average theater outputs only about 50 nits.

What about the new 4K HDR TVs currently on the market? Manufacturers are using brightness to market them. Top-of-the-line HDR TVs put out over 1,500 nits, and at CES 2018 Sony introduced a prototype TV capable of 10,000 nits.

So what do nits have to do with HDR? HDR is all about detail in both the shadows and the highlights, and a TV that isn’t bright enough may not be able to deliver true HDR content. Better-performing HDR TVs typically reach at least 600 nits of peak brightness, while high-end 4K HDR TVs go well over 1,000 nits.
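Why brightness matters becomes clearer once you see how HDR signals describe light. HDR10 and Dolby Vision both use the SMPTE ST 2084 “perceptual quantizer” (PQ) curve, which maps a signal level directly to an absolute luminance in nits, topping out at 10,000. The sketch below implements the ST 2084 EOTF with its published constants. The `code_to_nits` helper is hypothetical and assumes full-range codes (0 to 1023) for simplicity; real 10-bit HDR video usually uses limited range (64 to 940). It illustrates the curve only, not how any particular TV tone-maps.

```python
# SMPTE ST 2084 (PQ) constants, as published in the standard.
M1 = 2610 / 16384       # 0.1593017578125
M2 = 2523 / 4096 * 128  # 78.84375
C1 = 3424 / 4096        # 0.8359375
C2 = 2413 / 4096 * 32   # 18.8515625
C3 = 2392 / 4096 * 32   # 18.6875

PEAK_NITS = 10_000


def pq_to_nits(signal):
    """PQ EOTF: normalized signal (0.0-1.0) to absolute luminance in nits (cd/m^2)."""
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def code_to_nits(code, bits=10):
    """Hypothetical helper: treat `code` as a full-range integer value and convert it to nits."""
    return pq_to_nits(code / (2 ** bits - 1))


for code in (0, 256, 512, 768, 1023):
    print(f"10-bit code {code:4d} -> {code_to_nits(code):8.1f} nits")
```

With these constants, a half-scale signal comes out at only about 92 nits, while roughly the top quarter of the signal range is reserved for highlights from around 1,000 up to 10,000 nits. A TV that peaks at 600 nits has to compress (tone-map) everything brighter than it can show.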

A Window To Another World

The promise of HDR is that you can see what the eye can see. Its ultimate goal is to make a display look like a window into another world.

That said, 4K resolution is pretty much standard in most TVs today. However, every HDR format is a bit different, and they’re still competing against one another, especially for support from different companies.

HDR Classes

Currently, the most popular format is HDR10, an open standard that manufacturers can implement without paying any extra fees. HDR10 uses the 10-bit spec, hence the name, while Dolby Vision uses 12-bit. Although Dolby Vision was introduced first, many TV manufacturers have not implemented it because it requires a license fee. In terms of sheer technological might, Dolby Vision has a clear advantage, even with current TVs. Other HDR formats, including HLG (Hybrid Log-Gamma), Samsung’s HDR10+, and Technicolor’s Advanced HDR, are just getting started.

As I mentioned earlier, many TVs can display HDR, but there are several HDR formats out there. So if you plan on using your TV’s HDR capability, here’s what to remember.

How To Watch HDR

It’s critical to match the HDR format your TV supports with the HDMI source. For example, if you buy a TV that supports Dolby Vision, your external HDR source, such as a 4K Ultra HD Blu-ray player, must also support Dolby Vision, and so must the Blu-ray disc itself. Make sure you’re also using a high-speed HDMI cable. Lastly, make sure both the TV and the source support the right versions of HDMI and HDCP.

If other devices are involved, such as an HDMI extender, AV receiver, or matrix switcher, make sure they also support the same HDR format as your content, external source, and TV; otherwise you’ll get downgraded to 1080p or even a blank screen. In fact, if you need an HDMI extender that supports HDR, we recently made a video about that.
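The rule that every link in the chain must support the content’s HDR format boils down to a simple check. Here is a minimal sketch. The device names and format lists are hypothetical examples, not real product specs. Reporting a fallback to SDR is also a simplification; as noted above, real equipment may instead drop to 1080p or show a blank screen.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """One link in the HDMI chain and the HDR formats it can handle (hypothetical data)."""
    name: str
    hdr_formats: frozenset


def playable_format(content_format, chain):
    """Return the content's HDR format if every device supports it; otherwise name the weak links."""
    missing = [d.name for d in chain if content_format not in d.hdr_formats]
    if not missing:
        return content_format
    return "SDR (unsupported by: " + ", ".join(missing) + ")"


# Hypothetical home theater in which the receiver only passes HDR10.
chain = [
    Device("Blu-ray player", frozenset({"HDR10", "Dolby Vision"})),
    Device("AV receiver", frozenset({"HDR10"})),
    Device("TV", frozenset({"HDR10", "Dolby Vision", "HLG"})),
]

print(playable_format("Dolby Vision", chain))  # SDR (unsupported by: AV receiver)
print(playable_format("HDR10", chain))         # HDR10
```

Listing the devices that fail the check, instead of just returning yes or no, points you straight at the component to upgrade.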

Is HDR Worth It?

The topic of HDR can be complicated, but in theory, all of these formats can coexist inside your TV. HDR technology is exciting and will draw us even deeper into the thrilling movies and shows we love to watch. Should your next TV be HDR compatible? Our answer is an emphatic YES! Just make sure the TV also offers peak brightness high enough to make HDR pop. HDR is the most significant step up in home video since HDTV, and it’s at the center of television’s future.